Telecom’s race toward a single “Network Foundation Model” and GPU-powered AI-RAN sounded bold: a unified AI brain and monetized edge silicon promised simplicity, speed, and new revenue. Yet the pitch glossed over a stubborn truth about networks: plausible is not good enough when physical laws and standards decide whether a voice call connects or a handover succeeds. The enthusiasm conflated creative-language breakthroughs with deterministic control. It assumed a general-purpose model could span both reflex and reasoning while GPUs pulled double duty as cell-site baseband processors and side-hustle inference servers. The result was an alluring narrative, and a fragile one. A more grounded path stands out instead: use language models where intent and planning matter, use small deterministic tools where precision and timing rule, and stop counting on idle RAN cycles that vanish exactly when customers want AI the most.

Physics vs. Probability: Why LLMs Don’t Run Networks

Large language models are expert guessers trained to produce statistically coherent continuations, a skill that fits creative assistance and dialog but collides with the physics-bound determinism of radio. RF front ends, HARQ timing, beam management, and scheduler commits do not reward “close enough”; they demand microsecond consistency and observable guarantees. Collapsing real-time Layer 1 reflexes and higher-layer reasoning into a single “God Model” stretches both domains until neither is well served. Latency increases, jitter grows, and the same model that crafts plans may fabricate parameters. A mis-set power control target is not a near miss; it is a coverage hole. Safety-critical loops favor compact, verified algorithms or tightly scoped models with bounded behavior, while flexible reasoning belongs upstream where errors are caught by guardrails before any command touches the network.

The gap widens when protocol obligations meet probabilistic inference. Standards encode explicit state machines and timers that leave little room for ambiguity, and vendor interoperability assumes determinism down to individual message fields. An LLM can map intents to candidate actions, but treating its token predictions as a control policy risks silent failure modes and hard-to-reproduce bugs. Retrieval augmentation and structured output help, but only so far: radio timing cannot wait for multi-step reasoning, and a confidence score is not a guarantee. A better division recognizes two tempos. Reflex happens at the edge of physics and is handled by small, testable models and fixed-function blocks. Reasoning happens at the management layers, where an LLM can translate human language into structured plans without touching knobs directly. That separation respects real constraints while preserving room for adaptable operations.
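To make the second tempo concrete, here is a minimal sketch of a guardrail that sits between an LLM's structured output and the network. It assumes the plan has already been parsed into typed records. The parameter names, the bounds, and the `ProposedChange`/`gate_plan` helpers are hypothetical placeholders; real limits would come from the relevant 3GPP specifications and vendor documentation. The check is deterministic, so an out-of-range value or an invented parameter name is rejected before anything is applied.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Illustrative parameter names and bounds only (hypothetical); real limits belong
# in a vetted table derived from 3GPP specifications and vendor documentation.
ALLOWED_PARAMS: Dict[str, Tuple[float, float]] = {
    "handover_hysteresis_db": (0.0, 15.0),
    "p0_nominal_pusch_dbm": (-202.0, 24.0),
}

@dataclass(frozen=True)
class ProposedChange:
    cell_id: str
    param: str
    value: float

class PlanRejected(Exception):
    """Raised when an LLM-proposed change fails deterministic validation."""

def validate(change: ProposedChange) -> ProposedChange:
    """Return the change if every check passes; otherwise raise PlanRejected."""
    if change.param not in ALLOWED_PARAMS:  # catches invented parameter names
        raise PlanRejected(f"unknown parameter {change.param!r}")
    low, high = ALLOWED_PARAMS[change.param]
    if not low <= change.value <= high:
        raise PlanRejected(f"{change.param}={change.value} outside [{low}, {high}]")
    return change

def gate_plan(plan: List[ProposedChange]) -> List[ProposedChange]:
    """Validate every step before anything is applied; one bad step rejects the plan."""
    return [validate(step) for step in plan]

try:
    gate_plan([
        ProposedChange("cell-17", "handover_hysteresis_db", 3.0),
        ProposedChange("cell-17", "handover_hysteresis_db", 40.0),
    ])
except PlanRejected as err:
    print(f"rejected: {err}")  # rejected: handover_hysteresis_db=40.0 outside [0.0, 15.0]
```

Rejecting the whole plan on the first failing step is a deliberate choice in this sketch: a partially applied multi-step change is usually harder to reason about than no change at all.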

The Drift Tax of Monoliths

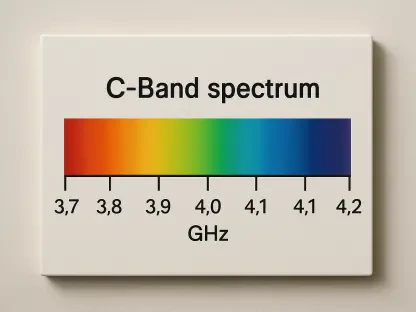

Networks change constantly, and those changes do not politely line up for quarterly model updates. New spectrum lights up, features toggle, antennas rotate, vendors swap, traffic shifts, and policies evolve. When a single large model entangles domains from RAN and Core to Transport and Billing, small changes create wide regression surfaces. Tuning one behavior can derail another, multiplying the test burden. Fine-tuning looks easy on paper, yet it can trigger catastrophic forgetting or cross-domain interference, forcing expensive, repeated validation. The result is a drift tax: either pay in compute and people to requalify the monolith after every meaningful change, or accept stale behavior that misses the network’s new reality. Either way, the promised reduction in operating expense slips away as entropy accumulates inside the centralized brain.

A modular approach reduces the blast radius of change. Instead of imprinting the entire network’s knowledge into a single artifact, keep domain knowledge in small, replaceable tools with documented inputs, outputs, and guarantees. When a vendor swap adds new counters or a policy revises QoS classes, update the relevant tool and its tests, not the entire model. The orchestrator, an LLM or similar planner, stays stable as a translator of intent, while execution logic lives in deterministic components that can be versioned, rolled back, and audited. This mirrors how large networks already manage risk: isolate, instrument, and certify. Crucially, it also keeps compute budgets sane, since validating one tool’s behavior is cheap compared with revalidating a giant model that entangles unrelated functions.
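A minimal sketch of that workflow follows. It assumes each tool ships with its own input and expected-output test cases. Publishing a new version qualifies only that tool, a failing version never becomes active, and rollback restores the previous version. `ToolRegistry`, `ToolVersion`, and the `qos_mapper` tool are hypothetical names, and the QoS mapping is deliberately simplified, with 5QI numbers used as illustrative labels.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

@dataclass(frozen=True)
class ToolVersion:
    version: str
    run: Callable[[dict], dict]
    tests: List[Tuple[dict, dict]]  # (input, expected output) pairs shipped with the tool

@dataclass
class ToolRegistry:
    """Hypothetical registry keeping a version history per domain tool."""
    history: Dict[str, List[ToolVersion]] = field(default_factory=dict)

    def publish(self, name: str, tool: ToolVersion) -> None:
        # Qualify only this tool against its own tests; other tools are untouched.
        for given, expected in tool.tests:
            actual = tool.run(given)
            if actual != expected:
                raise ValueError(f"{name} {tool.version} failed on {given}: got {actual}")
        self.history.setdefault(name, []).append(tool)

    def active(self, name: str) -> ToolVersion:
        return self.history[name][-1]

    def rollback(self, name: str) -> ToolVersion:
        versions = self.history[name]
        if len(versions) < 2:
            raise ValueError(f"no earlier version of {name} to roll back to")
        versions.pop()
        return versions[-1]

def map_qos_v1(request: dict) -> dict:
    # Simplified policy: voice gets 5QI 1, everything else 5QI 9.
    return {"5qi": 1 if request["service"] == "voice" else 9}

def map_qos_v2(request: dict) -> dict:
    # Revised policy adds a dedicated class for conversational video.
    return {"5qi": {"voice": 1, "video": 2}.get(request["service"], 9)}

registry = ToolRegistry()
registry.publish("qos_mapper", ToolVersion("1.0", map_qos_v1, [({"service": "voice"}, {"5qi": 1})]))
registry.publish("qos_mapper", ToolVersion("2.0", map_qos_v2, [
    ({"service": "voice"}, {"5qi": 1}),
    ({"service": "video"}, {"5qi": 2}),
]))
```

A failed `publish` leaves the active version unchanged. That property keeps a bad update from ever reaching the orchestrator, and it makes requalification after a policy change as small as the tool that changed.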

AI-RAN’s Correlation Fallacy

The economic story behind AI-RAN leaned on a tidy curve: GPUs at cell sites would process baseband in peak hours and sell spare cycles for AI inference off-peak, unlocking new revenue from the same silicon. Reality breaks the symmetry. Consumer network demand and consumer AI demand both crest in the evening, when video, gaming, and assistants converge. The very hours when AI would pay best are the hours radios need undivided compute. What remains are overnight windows that look like spot markets, with thin margins and volatile utilization. Meanwhile, the operator shoulders scheduling complexity across mixed-criticality workloads, where a mispredicted queue can degrade RF performance and turn dropped packets into dropped calls. That asymmetry leaves capital stranded and business cases stretched.
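The asymmetry can be checked with a few lines of arithmetic. The sketch below uses invented hourly profiles, not measured data, in which both RAN load and AI demand peak around 20:00. It compares two revenue figures. The first is what the off-peak pitch implicitly assumes: spare capacity spread evenly across the day. The second is what is earned when each spare hour is sold at that hour's price. The shortfall equals the number of hours times the covariance between spare capacity and price, so any negative correlation between the two erodes the business case.

```python
from statistics import fmean

def evening_peak(hour: int, base: float, peak: float, width: float) -> float:
    """Triangular bump centred on 20:00 that wraps around midnight (illustrative)."""
    distance = min(abs(hour - 20), 24 - abs(hour - 20))
    return base + (peak - base) * max(0.0, 1 - distance / width)

HOURS = range(24)
# Invented profiles: share of site compute used by baseband, and relative AI price.
ran_load = [evening_peak(h, base=0.25, peak=0.95, width=8) for h in HOURS]
ai_price = [evening_peak(h, base=0.2, peak=1.0, width=6) for h in HOURS]
spare = [1.0 - load for load in ran_load]  # compute left over for AI tenants

# Pitch assumption: spare capacity is equally available in every hour.
naive_revenue = fmean(spare) * sum(ai_price)
# Reality: each hour's spare capacity sells at that hour's price.
actual_revenue = sum(s * p for s, p in zip(spare, ai_price))

# The gap equals n * cov(spare, price), so negative covariance means lost revenue.
mean_spare, mean_price = fmean(spare), fmean(ai_price)
cov = fmean([(s - mean_spare) * (p - mean_price) for s, p in zip(spare, ai_price)])
assert abs((actual_revenue - naive_revenue) - len(spare) * cov) < 1e-9

print(f"naive={naive_revenue:.2f} actual={actual_revenue:.2f} "
      f"shortfall={naive_revenue - actual_revenue:.2f}")
```

The exact numbers depend entirely on the invented curves. The sign of the shortfall does not: whenever spare capacity and price move in opposite directions, the evening-peak pattern the paragraph describes, realized revenue falls below the flat-average estimate.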

Even in enterprise scenarios, shared silicon introduces brittle trade-offs. Hard real-time radio workloads demand predictable latency and power envelopes, while opportunistic inference thrives on batchability and throughput. Packing them together raises the risk of priority inversions and thermal throttling at awkward moments. The control software becomes a tightrope act, juggling SLAs for voice and AI tenants with little upside beyond marginal revenue from low-value cycles. A simpler approach is to decouple. Run RAN workloads on power-efficient, deterministic silicon sized to radio needs, and deploy separate AI servers where a customer demonstrably requires low latency—factories, venues, or private campus networks. This keeps each system predictable, keeps capacity planning honest, and prevents a scheduling problem from becoming a service outage.

Agentic Architecture and Hardware Strategy

A practical end-state looks agentic: a general-purpose LLM orchestrator for intent, task decomposition, and change explanation, paired with a toolbox of deterministic executors that do the real work. The orchestrator receives a plain-language goal—add capacity on a congested sector, test a new handover threshold, or generate a maintenance plan—and compiles it into structured actions. Those actions call narrow tools: verified SQL over telemetry stores, physics simulators for RF what-ifs, rule engines with formal constraints, or compact ML models like XGBoost tuned for specific chipsets. Tools return explicit success or typed errors, enabling the orchestrator to back off, seek approvals, or propose alternatives. This fail-safe posture beats “plausible” automation because mistakes surface as errors rather than silent misconfigurations.
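A minimal sketch of that contract, with the LLM stubbed out, follows. The compiled plan arrives as a list of structured steps. Each tool returns either `Ok` or a `ToolError` with a typed kind, and the executor stops at the first non-`Ok` result instead of improvising. The tool names (`prb_utilization`, `add_carrier`), the hard-coded telemetry, and the three error kinds are hypothetical stand-ins for the verified queries, simulators, and rule engines described above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Tuple, Union

class ErrorKind(Enum):
    INVALID_INPUT = "invalid_input"
    NEEDS_APPROVAL = "needs_approval"
    TRANSIENT = "transient"

@dataclass(frozen=True)
class Ok:
    value: dict

@dataclass(frozen=True)
class ToolError:
    kind: ErrorKind
    detail: str

Result = Union[Ok, ToolError]
Tool = Callable[[dict], Result]

def prb_utilization(args: dict) -> Result:
    """Stand-in for a vetted telemetry query; the numbers are hard-coded."""
    table = {"sector-A": 0.92, "sector-B": 0.41}
    sector = args.get("sector")
    if sector not in table:
        return ToolError(ErrorKind.INVALID_INPUT, f"unknown sector {sector!r}")
    return Ok({"sector": sector, "prb_util": table[sector]})

def add_carrier(args: dict) -> Result:
    """Stand-in executor: capacity changes always require human sign-off."""
    return ToolError(ErrorKind.NEEDS_APPROVAL, f"carrier add on {args['sector']} needs approval")

TOOLS: Dict[str, Tool] = {"prb_utilization": prb_utilization, "add_carrier": add_carrier}

def execute(plan: List[dict], tools: Dict[str, Tool], max_retries: int = 2) -> Tuple[str, List[str]]:
    """Run a compiled plan step by step, stopping at the first non-Ok result."""
    log: List[str] = []
    for step in plan:
        tool = tools.get(step["tool"])
        if tool is None:  # the planner named a tool that does not exist
            log.append(f"unknown tool {step['tool']!r}")
            return "rejected", log
        for _ in range(max_retries + 1):
            result = tool(step["args"])
            # Retry only transient failures; a real system would also back off here.
            if not (isinstance(result, ToolError) and result.kind is ErrorKind.TRANSIENT):
                break
        if isinstance(result, Ok):
            log.append(f"{step['tool']} ok: {result.value}")
            continue
        log.append(f"{step['tool']} {result.kind.value}: {result.detail}")
        if result.kind is ErrorKind.NEEDS_APPROVAL:
            return "awaiting_approval", log
        if result.kind is ErrorKind.TRANSIENT:
            return "unavailable", log
        return "rejected", log
    return "completed", log

plan = [
    {"tool": "prb_utilization", "args": {"sector": "sector-A"}},
    {"tool": "add_carrier", "args": {"sector": "sector-A"}},
]
status, steps_log = execute(plan, TOOLS)
print(status)  # awaiting_approval
```

Because the stand-in `add_carrier` always returns `NEEDS_APPROVAL`, the capacity change surfaces as a request for sign-off rather than an applied configuration, and a hallucinated tool or sector ends in `rejected` instead of a silent misconfiguration.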

Hardware should mirror that separation. Radios thrive on dedicated, power-efficient silicon, whether specialized baseband chips such as Marvell platforms and Nokia’s ReefShark or modern CPUs such as Intel’s Granite Rapids, because their energy profiles and determinism suit real-time demands. AI belongs on separate servers chosen for the workload: high-throughput inference in the core, low-latency stacks at the enterprise edge when use cases justify it. This partitioning reduces vendor lock-in, eases supply risk tied to premium GPUs, and clarifies capacity planning. It also aligns incentives: RAN planners size for coverage and throughput, while AI teams size for model latency and concurrency. By keeping radio and general AI workloads off shared compute, operators gain simpler safety cases, steadier power budgets, and clearer cost attribution.

Operating Model and Industry Trajectory

Operating the agentic stack requires disciplined change control without heavy drag. Each tool carries its own CI/CD, benchmarks, and guardrails, allowing quick qualification when equipment evolves or policy shifts. Telemetry flows back into a provenance layer that ties outcomes to inputs, versions, and approvals. The orchestrator adds explainability by logging the plan it compiled, the tools it invoked, and the checks it passed or failed. This structure shortens validation cycles and sharpens audits. Importantly, operators can phase adoption: start with read-only advisors and simulation-in-the-loop, graduate to closed-loop actions on low-risk domains, and expand as confidence grows. Governance becomes productized rather than a spreadsheet ritual.
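A minimal sketch of the provenance and phasing ideas follows, under stated assumptions. Each action carries a record of its intent, compiled plan, tool versions, check results, and approver. The record is fingerprinted with SHA-256 over canonical JSON so an outcome can be traced to its exact inputs. The four phases and the `low` risk label are hypothetical and simply mirror the adoption path described above; they are not drawn from any standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from enum import IntEnum
from typing import Dict, List, Optional

class Phase(IntEnum):
    ADVISORY = 1              # read-only recommendations
    SIMULATION = 2            # actions run only against a simulator
    CLOSED_LOOP_LOW_RISK = 3  # automatic changes on low-risk domains only
    CLOSED_LOOP = 4           # automatic changes wherever checks pass

@dataclass(frozen=True)
class ProvenanceRecord:
    intent: str
    plan: List[dict]
    tool_versions: Dict[str, str]
    checks: Dict[str, bool]
    approved_by: Optional[str]

    def digest(self) -> str:
        """Stable fingerprint; sorted keys mean dict ordering does not change it."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def decide_mode(record: ProvenanceRecord, phase: Phase, risk: str) -> str:
    """How an action may run, given its checks, the adoption phase, and its risk."""
    if not all(record.checks.values()):
        return "blocked"
    if phase is Phase.ADVISORY:
        return "recommend_only"
    if phase is Phase.SIMULATION or (phase is Phase.CLOSED_LOOP_LOW_RISK and risk != "low"):
        return "simulate"
    if risk != "low" and record.approved_by is None:
        return "needs_approval"
    return "apply"

record = ProvenanceRecord(
    intent="add capacity on congested sector-A",
    plan=[{"tool": "add_carrier", "args": {"sector": "sector-A"}}],
    tool_versions={"add_carrier": "1.3.0"},
    checks={"bounds": True, "simulation": True},
    approved_by=None,
)
print(record.digest()[:12], decide_mode(record, Phase.CLOSED_LOOP_LOW_RISK, "high"))
```

Encoding the phase as data rather than as a code branch per deployment lets an operator widen automation by changing one setting, while the digest makes each logged outcome auditable against the exact plan and versions that produced it.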

Industry sentiment has been drifting toward this modular realism. Critical infrastructure teams across sectors have dialed back bets on monolithic foundation models for control, favoring systems with explicit safety boundaries and layered time scales. The shared GPU story has been reassessed as demand data exposed correlated peaks and low off-peak yields. In that light, telcos had clearer next steps: stop chasing a single brain, retire assumptions about GPU monetization at cell sites, and build an agentic toolbox that cleanly separates reasoning from execution. The trajectory pointed toward smaller, verifiable models at the reflex edge, orchestrated by language systems that handle intent and accountability. Capital plans and operating models followed those contours, and the sector looked better for it.