The volume of data generated by billions of interconnected devices is straining traditional, centralized cloud infrastructures and exposing performance limits that hold back real-time applications. In the conventional model, data must traverse wide-area networks to reach a distant data center for processing. That journey introduces delay (latency) that is unacceptable for technologies such as autonomous vehicle navigation, remote surgical robotics, and dynamic smart grid management, where decisions must be made in a fraction of a second. This bottleneck has prompted a rethinking of network architecture, with a shift away from the centralized cloud and toward a more distributed and intelligent model. Integrating fog computing, which brings computational power closer to the data source, with the modular agility of microservices yields a framework that promises a more responsive, efficient, and secure Internet of Things ecosystem.

The Architectural Shift to the Network Edge

Fog computing introduces an intermediate computational layer between the IoT devices at the network periphery and the centralized cloud. This layer is not a monolithic entity but a distributed network of fog nodes (devices such as gateways, routers, switches, and local servers) positioned close to where data is generated and acted upon. By distributing computation, storage, and networking services across the continuum from device to cloud, the fog model redesigns the data processing pipeline. Instead of funneling all raw data to a distant cloud for analysis, it lets significant processing occur locally at the edge. Time-sensitive information can be filtered, aggregated, and analyzed immediately, while the cloud is reserved for more complex, long-term analytics and large-scale data storage. The result is a tiered architecture in which each layer handles the work it is best placed to do.
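
To make the filter-and-aggregate step concrete, here is a minimal sketch of what a fog node might do with one batch of sensor readings. The `Summary` record, the `process_at_fog` function, the sensor name, and the threshold are all hypothetical names and values chosen for illustration; they do not come from any particular fog platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact record a fog node forwards to the cloud (hypothetical schema)."""
    sensor_id: str
    count: int
    mean_value: float
    max_value: float


def process_at_fog(sensor_id, readings, alert_threshold):
    """Filter and aggregate one batch of raw readings locally.

    Returns (alerts, summary). Alerts are readings that need an immediate
    local reaction; the summary is the only thing sent upstream.
    """
    # Filter: drop missing samples (None) before any analysis.
    valid = [r for r in readings if r is not None]
    if not valid:
        return [], None
    # Time-sensitive analysis happens here, next to the data source.
    alerts = [r for r in valid if r > alert_threshold]
    # Aggregate: one summary replaces the raw batch for long-term analytics.
    summary = Summary(sensor_id, len(valid), mean(valid), max(valid))
    return alerts, summary


# Assumed example batch: three valid readings and one failed sample.
alerts, summary = process_at_fog(
    "temp-01", [21.0, 22.0, None, 95.0], alert_threshold=80.0
)
```

In this sketch the out-of-range reading is acted on at the fog node, while the cloud receives a single summary instead of the raw batch.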

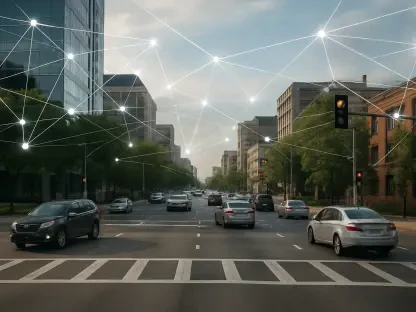

The most immediate and transformative benefit of adopting a fog computing architecture is the dramatic reduction in network latency, a critical factor for a growing class of mission-critical IoT applications. For these systems, the round-trip time for data to travel from a device to the cloud and back is a non-negotiable performance metric. Consider industrial automation, where robotic arms on a factory floor must react instantly to sensor inputs to avoid costly errors or severe safety hazards. Similarly, in the realm of connected vehicles, vehicle-to-everything (V2X) communication requires near-instantaneous data exchange to prevent collisions and manage traffic flow effectively. Remote healthcare applications, such as telesurgery and real-time patient monitoring, demand the same level of responsiveness to allow a surgeon to operate with precision from miles away. In all these high-stakes scenarios, the delays inherent in a purely cloud-centric model are not just inconvenient; they are prohibitive. By processing data locally within the fog layer, these systems can achieve the ultra-low latency required for true real-time operation.
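
A rough calculation shows why distance alone matters. The sketch below estimates only the round-trip propagation delay over optical fibre, where signals travel at roughly two thirds of the speed of light (about 200,000 km/s). The two distances are assumptions picked for illustration, and real round-trip times would be higher once queuing, transmission, and processing delays are added.

```python
# Approximate signal speed in optical fibre (about two thirds of c).
FIBRE_SPEED_KM_PER_S = 200_000.0


def round_trip_propagation_ms(distance_km):
    """Round-trip propagation delay in milliseconds.

    Ignores queuing, transmission, and processing time, so it is a
    lower bound rather than a realistic end-to-end latency.
    """
    return 2 * distance_km / FIBRE_SPEED_KM_PER_S * 1000.0


# Assumed distances, for illustration only.
cloud_ms = round_trip_propagation_ms(1500.0)  # distant cloud region
fog_ms = round_trip_propagation_ms(1.0)       # fog node on the same site
```

Under these assumptions the cloud round trip costs about 15 ms in propagation alone, against about 0.01 ms for the nearby fog node.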

A New Blueprint for Service Deployment

While fog computing provides the foundational infrastructure for edge processing, its true potential is unlocked through the strategic deployment and management of microservices. This modern software development approach involves breaking down large, monolithic applications into a collection of small, independent, and loosely coupled services, with each one responsible for a single, specific business function. This inherent modularity offers immense flexibility, allowing developers to update, scale, and manage individual components without disrupting the entire system. However, in a distributed fog environment, the critical challenge becomes determining where each microservice should reside for optimal performance. The solution lies in a structured, intelligent placement strategy guided by a comprehensive taxonomy. By classifying microservices based on their unique computational requirements, data dependencies, security needs, and latency sensitivity, system architects can create a detailed blueprint for their deployment. This transforms the process from guesswork into a deliberate, optimized methodology.
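
One way to picture taxonomy-driven placement is as a rule that matches each service's profile against what each tier can offer. The following is a minimal sketch under stated assumptions: the `ServiceProfile` fields mirror the four classification criteria named above, while the tier latencies, tier capacities, and the "prefer the most centralized tier that fits" rule are hypothetical choices, not a published algorithm.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    DEVICE = "device"
    FOG = "fog"
    CLOUD = "cloud"


@dataclass(frozen=True)
class ServiceProfile:
    name: str
    max_latency_ms: float         # latency sensitivity
    cpu_cores: float              # computational requirement
    needs_local_data: bool        # data dependency on nearby sensors
    handles_sensitive_data: bool  # security need: keep data on premises


# Hypothetical typical latency and capacity per tier (illustrative values).
TIER_LATENCY_MS = {Tier.DEVICE: 1.0, Tier.FOG: 10.0, Tier.CLOUD: 100.0}
TIER_CPU_CORES = {Tier.DEVICE: 1.0, Tier.FOG: 8.0, Tier.CLOUD: 1024.0}


def place(profile):
    """Return the most centralized tier that meets every constraint, or None."""
    for tier in (Tier.CLOUD, Tier.FOG, Tier.DEVICE):
        if TIER_LATENCY_MS[tier] > profile.max_latency_ms:
            continue  # tier is too slow for this service
        if TIER_CPU_CORES[tier] < profile.cpu_cores:
            continue  # tier lacks the compute the service needs
        if tier is Tier.CLOUD and (
            profile.needs_local_data or profile.handles_sensitive_data
        ):
            continue  # data must stay close to its source
        return tier
    return None


collision = ServiceProfile("collision-avoidance", 20.0, 2.0, True, False)
analytics = ServiceProfile("fleet-analytics", 5000.0, 64.0, False, False)
estop = ServiceProfile("emergency-stop", 2.0, 0.5, True, False)

placements = {p.name: place(p) for p in (collision, analytics, estop)}
```

With these assumed numbers, the collision-avoidance service lands on the fog tier, fleet analytics goes to the cloud, and the emergency stop stays on the device. A service whose constraints no tier can meet returns `None`, which signals that the profile or the infrastructure needs to change.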

The dynamic and often unpredictable nature of the IoT landscape demands an architecture that is not only efficient but also highly adaptive and resilient. A static deployment of microservices would quickly become suboptimal as network conditions fluctuate, user demands shift, and new devices join the ecosystem. Consequently, an agile framework must be implemented, one that leverages intelligent algorithms to continuously monitor the environment and dynamically adjust microservice placement and resource allocation in real time. This involves sophisticated mechanisms for service discovery, enabling services to locate and communicate with each other seamlessly across the distributed network. It also requires advanced data management protocols that can intelligently route information to the optimal processing location based on current network traffic and workload. This inherent agility ensures that the IoT system remains robust and maintains peak performance, automatically adapting to changing operational parameters to deliver a consistently high-quality user experience without requiring manual intervention.
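
Service discovery with dynamic routing can be sketched as a registry that tracks which instances are alive and sends each request to the best one at that moment. The example below is an illustrative assumption rather than the API of any real discovery tool: the `ServiceRegistry` class, the heartbeat time-to-live, and the node names are all hypothetical, and timestamps are passed in explicitly to keep the behavior deterministic.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    node: str
    latency_ms: float      # most recently reported latency to this node
    last_heartbeat: float  # timestamp of the latest heartbeat, in seconds


class ServiceRegistry:
    """Hypothetical in-memory registry with heartbeat-based liveness."""

    def __init__(self, ttl_s=30.0):
        self.ttl_s = ttl_s
        self._instances = {}  # service name -> {node name: Instance}

    def heartbeat(self, service, node, latency_ms, now):
        """Register or refresh an instance of a service on a fog node."""
        nodes = self._instances.setdefault(service, {})
        nodes[node] = Instance(node, latency_ms, now)

    def resolve(self, service, now):
        """Return the live node with the lowest latency, or None."""
        live = [
            inst
            for inst in self._instances.get(service, {}).values()
            if now - inst.last_heartbeat <= self.ttl_s
        ]
        if not live:
            return None
        return min(live, key=lambda inst: inst.latency_ms).node


registry = ServiceRegistry(ttl_s=30.0)
registry.heartbeat("video-analytics", "fog-a", 8.0, now=0.0)
registry.heartbeat("video-analytics", "fog-b", 12.0, now=0.0)
first = registry.resolve("video-analytics", now=10.0)

# fog-a stops reporting; only fog-b sends a fresh heartbeat.
registry.heartbeat("video-analytics", "fog-b", 12.0, now=40.0)
second = registry.resolve("video-analytics", now=45.0)
```

Here traffic initially goes to the faster node and shifts to the other one once the first stops sending heartbeats, without manual intervention. A production system would also need replication of the registry itself, which this sketch leaves out.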

Fortifying the Foundations of a Connected Future

In an increasingly interconnected world, security remains one of the most significant challenges for IoT deployments, as every connected device represents a potential point of vulnerability for cyber threats. A purely reactive approach to security is no longer sufficient to protect against sophisticated attacks. The integrated fog and microservice architecture addresses this challenge head-on by championing a “security-by-design” philosophy. Instead of treating security as an afterthought or a separate layer to be added on, this model embeds robust security measures directly into the fabric of the system from its inception. This is achieved by implementing layered security protocols at various points of service interaction, from the device level to the fog nodes and all the way up to the cloud. Each microservice can be equipped with its own authentication and authorization controls, creating isolated compartments that effectively limit the potential impact of a breach. This proactive and integrated approach ensures that data integrity, confidentiality, and user privacy are safeguarded throughout the data lifecycle.
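
The idea of per-service authentication and authorization can be illustrated with a small sketch in which every microservice holds its own secret and its own access list. The `SecuredService` class, the token format, and the caller names are hypothetical and deliberately simplified: tokens here have no expiry or replay protection, and a real deployment would more likely rely on an established mechanism such as mutual TLS or signed tokens with expiry.

```python
import hashlib
import hmac


def sign(secret, caller, scope):
    """Issue an HMAC-SHA256 token binding a caller to a scope (sketch only)."""
    message = f"{caller}|{scope}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


class SecuredService:
    """Hypothetical microservice with its own secret and access list."""

    def __init__(self, name, secret, allowed):
        self.name = name
        self._secret = secret
        self._allowed = allowed  # caller -> set of permitted scopes

    def handle(self, caller, scope, token):
        # Authentication: was the token issued with this service's secret?
        expected = sign(self._secret, caller, scope)
        if not hmac.compare_digest(expected.encode(), token.encode()):
            return "401 unauthenticated"
        # Authorization: is this caller allowed to use this scope?
        if scope not in self._allowed.get(caller, set()):
            return "403 forbidden"
        return "200 ok"


telemetry = SecuredService(
    "telemetry", b"telemetry-secret", {"gateway-1": {"read"}}
)
actuator = SecuredService(
    "actuator", b"actuator-secret", {"controller-1": {"write"}}
)

read_token = sign(b"telemetry-secret", "gateway-1", "read")
write_token = sign(b"telemetry-secret", "gateway-1", "write")

ok = telemetry.handle("gateway-1", "read", read_token)
denied = telemetry.handle("gateway-1", "write", write_token)
isolated = actuator.handle("gateway-1", "read", read_token)
```

Because each service verifies tokens against its own secret, a credential that works for the telemetry service is rejected by the actuator service. This is the compartment effect described above: compromising one service's credentials does not grant access to its neighbors.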

The fusion of fog computing and microservices marks a turning point in the evolution of the Internet of Things, addressing the latency and scalability challenges that have constrained its potential. This architecture removes the performance bottlenecks of centralized cloud models by distributing computation to the network edge, closer to where data is created and needed. A taxonomy for microservice placement is a foundational step, because it turns deployment from an ad hoc activity into a deliberate process aimed at maximizing system efficiency and responsiveness. Together, these elements produce an ecosystem in which physical hardware and modular software work in concert. Ultimately, this integrated approach offers a blueprint for building resilient, secure, and self-adapting systems, and lays groundwork for further interdisciplinary research and innovation.